- Supercomputing has become an integral part of modern scientific discovery.

- How do we know we can trust simulation results?

- Uncertainty quantification (UQ) aims to find objective grounds for confidence in models.

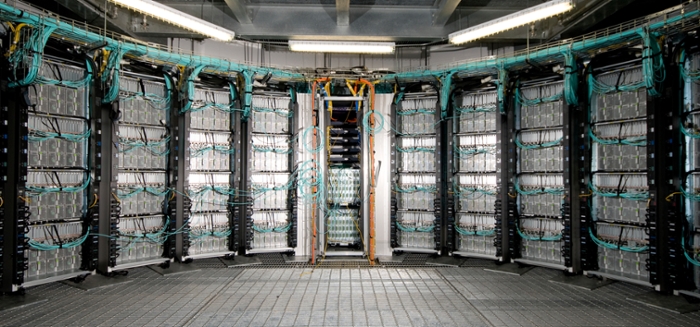

Supercomputing propels science forward in fields like climate change, precision medicine, and astrophysics. It is considered a vital part of the scientific endeavor today.

The strategy for scientific computing: Start with a trusted mathematical model, transform it into a computable form, and express the algorithm in computer code that is executed to produce a result. From this result, we infer information about the original physical system.

In this way, computer simulations originate new claims to scientific knowledge. But when do we have evidence that claims to knowledge originating from simulation are justified? Questions like these have been raised before.

In many engineering applications of computer simulations, we are used to speaking about verification and validation (V&V). Verification means confirming the simulation results match the solutions to the mathematical model. Validation means confirming that the simulation represents the physical phenomenon well. In other words, V&V separates the issues of solving the equations right, versus solving the right equations.

If a published computational study reports on completing a careful V&V study, we are likely to trust the results more. But is it enough? Does it really produce reliable results, which we trust to create knowledge?

Thirty years ago, the same issues were being raised about experiments: How do we come to rationally believe in an experimental result? That discussion examined the strategies that experimental scientists use to provide grounds for rational belief in experimental results. For example, confidence in an instrument increases if we can use it to get results that are expected. We also gain confidence in an experimental result if it can be replicated with a different instrument or apparatus.

The question of whether we have evidence that claims to scientific knowledge stemming from simulation are justified is not as clear-cut as the V&V framework suggests. When we compare results with other simulations, for example, simulations that used a different algorithm or a more refined model, the comparison does not fit neatly into either verification or validation.

And our work is not done when a simulation completes. Data requires interpretation, visualization, and analysis — all crucial for reproducibility. We usually try to summarize qualitative features of the system under study, and generalize these features to a class of similar phenomena (i.e. managing uncertainties).

The new field of uncertainty quantification (UQ) aims to give mathematical grounds for confidence in simulation results. It is a response to the complicated nature of justifying the use of simulation results to draw conclusions. UQ presupposes verification and informs validation.

Verification deals with the errors that occur when converting a continuous mathematical model into a discrete one, and then to a computer code. There are known sources of errors — truncation, round-off, partial iterative convergence — and unknown sources of errors — coding mistakes, instabilities.
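One common way to gather verification evidence is to check that the error shrinks at the rate the numerical method promises as the discretization is refined. The sketch below is only an illustration, not a method taken from this article: it assumes a toy model (exponential decay, dy/dt = -y) solved with forward Euler, and the function names are hypothetical. Forward Euler is first-order accurate, so halving the step size should roughly halve the truncation error.

```python
import math


def euler_decay(n_steps, t_end=1.0):
    """Integrate dy/dt = -y, y(0) = 1 up to t_end with forward Euler."""
    dt = t_end / n_steps
    y = 1.0
    for _ in range(n_steps):
        y += dt * (-y)
    return y


def observed_orders(step_counts, t_end=1.0):
    """Estimate the order of convergence from successive step-size halvings.

    Assumes each entry of step_counts is double the previous one, so the
    step size is halved between consecutive runs.
    """
    exact = math.exp(-t_end)  # analytical solution of the toy model
    errors = [abs(euler_decay(n, t_end) - exact) for n in step_counts]
    # Error ratio between consecutive refinements, expressed as a power of 2.
    return [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]


orders = observed_orders([10, 20, 40, 80, 160])
print("observed orders of convergence:", [round(p, 3) for p in orders])
```

If the observed order drifts away from the theoretical value of 1, that is a hint of a coding mistake or an instability, which are exactly the unknown error sources that verification is meant to expose.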

Uncertainties stem from input data, modeling errors, genuine physical uncertainties, and random processes, so UQ is associated with the validation of a model. It follows that sufficient verification should be done first, before attempting validation. But is this always done in practice?
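To make the idea of input uncertainty concrete, here is a minimal Monte Carlo sketch. Everything in it is an assumption chosen for illustration: the model (exponential decay evaluated at t = 1), the normal distribution placed on the rate constant `k`, and the function names. It samples the uncertain input many times, runs the model for each sample, and summarizes the spread of the outputs.

```python
import math
import random
import statistics


def decay_output(k, t=1.0):
    """Toy model: quantity remaining at time t for decay rate k."""
    return math.exp(-k * t)


def propagate(n_samples=10_000, k_mean=1.0, k_std=0.1, seed=42):
    """Propagate an uncertain decay rate through the model by sampling.

    The rate k is assumed (for illustration only) to be normally
    distributed. A fixed seed keeps the experiment repeatable.
    """
    rng = random.Random(seed)
    outputs = [decay_output(rng.gauss(k_mean, k_std)) for _ in range(n_samples)]
    return statistics.mean(outputs), statistics.stdev(outputs)


mean_y, std_y = propagate()
print(f"output mean = {mean_y:.4f}, output std = {std_y:.4f}")
```

Reporting the output as a mean with a spread, rather than a single number, is one simple way of stating how much confidence the inputs allow. Fixing and reporting the random seed also lets others repeat the computation exactly.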

Verification provides evidence that the solver is fit for purpose, but this is subject to interpretation: the idea of accuracy is linked to judgments.

Many articles discussing reproducibility in computational science place emphasis on the importance of code and data availability. But making code and data open and publicly available is not enough. To provide evidence that results from simulation are reliable requires solid V&V expertise and practice, reproducible-science methods, and carefully reporting our uncertainties and judgments.

Supercomputing research should be executed using reproducible practices, taking good care of documentation and reporting standards, including appropriate use of statistics, and providing any research objects needed to facilitate follow-on studies.

Even if the specialized computing system used in the research is not available to peers, conducting the research as if it will be reproduced increases trust and helps justify the new claims to knowledge.

Computational experiments often involve deploying precise and complex software stacks, with several layers of dependencies. Multiple details must be taken care of during compilation, setting up the computational environment, and choosing runtime options. Thus, making available the source code (with a detailed mathematical description of the algorithm) is a minimum prerequisite for reproducibility: necessary, but not sufficient.

We also require detailed description and/or provision of:

- Dependencies

- Environment

- Automated build process

- Running scripts

- Post-processing scripts

- Secondary data generating published figures
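As one small illustration of documenting dependencies and environment, the sketch below records the interpreter version, platform, and installed package versions at run time. It is a hypothetical helper, not a tool referenced by this article, and it covers only the Python layer of a software stack; compilers, system libraries, and job-scheduler settings would need to be captured separately.

```python
import json
import platform
import sys
from importlib import metadata


def capture_environment():
    """Collect a JSON-serializable snapshot of the Python environment."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions with missing or broken metadata
            packages[name] = dist.version
    return {
        "python_version": platform.python_version(),
        "platform": platform.platform(),
        "executable": sys.executable,
        "argv": list(sys.argv),
        "packages": dict(sorted(packages.items())),
    }


def save_environment(path):
    """Write the snapshot next to the results so both are archived together."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(capture_environment(), handle, indent=2)


snapshot = capture_environment()
print("Python", snapshot["python_version"], "on", snapshot["platform"])
print(len(snapshot["packages"]), "installed packages recorded")
```

Calling `save_environment("environment.json")` at the start of each run (the file name is only an example) leaves a record that can be compared later if a replication attempt produces different results.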

Not only does this practice facilitate follow-on studies, removing roadblocks for building on our work, it also enables getting at the root of discrepancies if and when another researcher attempts a full replication of our study.

How far are we from achieving this practice as standard? One survey of a sample (admittedly small) of papers submitted to a supercomputing conference found that only 30% of the papers provide a link to the source code, only 40% mention the compilation process, and only 30% mention the steps taken to analyze the data.

We have a long way to go.

This article has been edited from a source dated Feb. 14.